|< << 2 of 3

>> >|

13 December 2013 · about a minute read

exocortex, metta

Our lives are increasingly mediated by, filtered through, and experienced via technology. A plethora of mechanisms capture, store, examine, manipulate, derive intelligence from, and share our information. This happens either with our knowledge, consent, and intent or without.

Aral Balkan site

30 October 2013 · 11 minutes read

communication, howto, structured-secure-streams, uvvy

Introduction

Structured Secure Streams provide a secure, encrypted and authenticated data connection between endpoints. It's a simple userspace library written in C++14 with Boost. It runs over standard UDP and provides reliable delivery, multiple streams, quick connection setup, and end-to-end connection encryption and authentication.

SSS is based on SST, an experimental, unfinished project under UIA.

SSS is an experimental transport protocol designed to address the needs of modern applications that need to juggle many asynchronous communication activities in parallel, such as downloading different parts of a web page simultaneously and playing multiple audio and video streams at once.

Read more →

25 October 2013 · 6 minutes read

peer-to-peer, routing

The problem

Every network needs a way to find a node's peers; this is essential for establishing connections, propagating updates and maintaining network integrity.

Overlay networks based on DHT or similar mechanisms (Kademlia, Chord) use peer nodes to figure this information out. Usually in such a network you have a node ID, 128 or 160 bits in length, which uniquely identifies the node and the node's position in routing tables. Based on this ID you simply look up the IP address. But wait, look it up from where?
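To make "position in routing tables" a bit more concrete, here is a minimal sketch of the XOR metric that Kademlia uses to compare node IDs. It assumes 160-bit IDs stored most significant byte first; the names `node_id`, `xor_distance`, `closer` and `bucket_index` are made up for this illustration, and the bucket numbering is just one common convention.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical 160-bit node identifier, most significant byte first.
using node_id = std::array<std::uint8_t, 20>;

// Kademlia-style distance: bytewise XOR of the two identifiers.
node_id xor_distance(const node_id& a, const node_id& b)
{
    node_id d{};
    for (std::size_t i = 0; i < d.size(); ++i)
        d[i] = static_cast<std::uint8_t>(a[i] ^ b[i]);
    return d;
}

// True if x is strictly closer to target than y.
// std::array compares lexicographically, which matches numeric order
// for big-endian byte strings of equal length.
bool closer(const node_id& target, const node_id& x, const node_id& y)
{
    return xor_distance(target, x) < xor_distance(target, y);
}

// Index (0..159) of the most significant bit in which the IDs differ.
// One common convention uses this as the routing table bucket number.
int bucket_index(const node_id& self, const node_id& peer)
{
    assert(self != peer); // identical IDs have no differing bit
    const node_id d = xor_distance(self, peer);
    std::size_t i = 0;
    while (d[i] == 0) ++i; // a non-zero byte exists because self != peer
    int bit = 7;
    while (((d[i] >> bit) & 1) == 0) --bit;
    return static_cast<int>((d.size() - 1 - i) * 8) + bit;
}
```

The metric only tells you which known peer is nearest to an ID; it does not by itself answer where the first peer comes from.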

Read more →

7 October 2013 · 2 minutes read

metta, report, structured-secure-streams, uvvy

The Structured Secure Streams library is getting into shape. With C++11 and Boost it's relatively simple to write, even without the tremendous help of Qt. I did have to write some helpers, which are factored out into a separate library; grab arsenal if you want. Some nice things there are byte_array, settings_provider, binary literals, hash_combine, make_unique, a contains() predicate, a base32 encoder-decoder and opaque big/little endian integer types.

For example:

    big_uint32_t magic = 0xabbadead;
    // magic will store data in network byte order in memory,
    // and convert it as necessary for operations. You don't
    // have to think about it at all.

    int bin_literal = 1010101_b;
    int flags = 00001_b | 10100_b;
    int masked = bin_literal & 01110_b;

    byte_array data{'h','e','l','l','o'};
    auto hell = data.left(4);
    // And so on...
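For readers wondering how an opaque endian type can hide the byte order, here is a minimal sketch of the idea. It is not the arsenal implementation; `be_uint32` is a hypothetical stand-in that keeps its bytes in network order and converts on every read and write.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for an opaque big-endian integer type.
// NOT the arsenal implementation, only an illustration of the idea.
class be_uint32
{
    std::uint8_t bytes_[4] = {0, 0, 0, 0}; // always most significant byte first

    // Split a host-order value into network-order bytes.
    void store(std::uint32_t host)
    {
        bytes_[0] = static_cast<std::uint8_t>(host >> 24);
        bytes_[1] = static_cast<std::uint8_t>(host >> 16);
        bytes_[2] = static_cast<std::uint8_t>(host >> 8);
        bytes_[3] = static_cast<std::uint8_t>(host);
    }

public:
    be_uint32() = default;
    be_uint32(std::uint32_t host) { store(host); }
    be_uint32& operator=(std::uint32_t host) { store(host); return *this; }

    // Reassemble a host-order value, independent of the CPU's endianness.
    operator std::uint32_t() const
    {
        return (std::uint32_t{bytes_[0]} << 24) | (std::uint32_t{bytes_[1]} << 16)
             | (std::uint32_t{bytes_[2]} << 8)  |  std::uint32_t{bytes_[3]};
    }

    // Raw bytes exactly as they would appear on the wire.
    const std::uint8_t* data() const { return bytes_; }
};

static_assert(sizeof(be_uint32) == 4, "must overlay a 4-byte wire field");
```

Because the shifts work on values rather than on memory, the same code gives the same wire bytes on little-endian and big-endian hosts.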

But enough about support libs. One of the major milestones is the ability to set up an encrypted connection between two endpoints. This works reliably in the simulator, which is another good feature. In the simulator you can define link properties, such as packet propagation delay and a loss rate between 0.0 and 1.0, and set up a host network of arbitrary complexity. It currently requires manual setup of every link between the hosts, but I hope to simplify that a bit using some network configuration helpers.

With an encrypted connection set up, streams fire off events on receive or substream activation. There's still a lot to do, for example proper MTU configuration, congestion control, reliable delivery timeouts and lots of small fixes, but I now plan to switch to porting the userspace applications to SSS streams and then flush out the issues with a bunch of unit and integration tests.

The first target is the opus-streaming app from uvvy. It's a simple audio-chat application, and switching it to SSS streams serves two purposes. First, I want to see how well SSS can handle real-time traffic. Second, I haven't polished unreliable datagram sending much, and this is what opus-stream uses, so it will serve as a field test.

8 September 2013 · 2 minutes read

metta, report, structured-secure-streams, uvvy

To continue work on the structured streams transport I decided to get rid of the XDR data representation, as well as the slightly awkward boost.serialization library.

Read more →

23 May 2013 · 2 minutes read

clang, compilers, howto, llvm

Just a very simple thing to try and build Clang with polly, LLVM, libcxx, lldb and lld from trunk. Isn't it?

Using git, because cloning a git repo with full history is still faster than checking out an svn repo with serf. Yay!

These instructions are not for copy-paste; they show the general idea and should work with minor changes.

Read more →

24 April 2013 · about a minute read

metta, structured-secure-streams, uvvy

In the meantime, I'm slowly rewriting Bryan Ford's SST (Structured Streams Transport) library, using modern C++ and boost.asio, in the hope that it will be easier to port to Metta. I called it libsss (Structured Secure Streams).

As this work progresses I also plan to write up the description of this protocol as an RFC document, so there will be some reference point for alternative implementations. Current specification progress is available in the libsss repo on GitHub.

I'd like to take the chance to thank Aldrin D'Souza for his excellent C++ wrapper around the OpenSSL crypto functions. He kindly licensed it for free use under the BSD License.

update: Oct 2014 repository moved.

21 March 2013 · 2 minutes read

content-distribution, file-sharing, uvvy

Some issues that need tackling in design of file sharing (see Brendan's post here):

The issue of trust: right now, the file is only distributed across a range of devices you manually allow to access your data. This doesn't solve the problem per se, but just makes it easier to tackle for the initial implementation. The data and metadata could be encrypted with asymmetric schemes (private keys), but that doesn't give full security.

The issue of overhead: this is addressed by automatic deduplication at the block level. If people share the same file using the same block size, chances are all the blocks will match up and hence need to be stored only once. If there are minor modifications, only some blocks will mismatch while the others stay perfectly in sync, which means much less storage overhead.

Redundancy: this also makes it possible to spread the file blocks across other nodes more evenly. With an encoding scheme that allows error correction, the file may be reconstructed even if some of its blocks are lost completely.

Plausible deniability: if your file is not stored in a single place as a single blob, it becomes much harder to prove you have it.
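The block-level deduplication mentioned above can be sketched roughly as follows. This is an illustration under loud assumptions: `block_store` and `block_id` are hypothetical names, blocks are fixed-size, and `std::hash` stands in for the cryptographic hash a real store would need.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical block identifier. std::hash is NOT cryptographic and is used
// here only as a placeholder for a real content hash.
using block_id = std::size_t;

class block_store
{
    std::map<block_id, std::string> blocks_; // each unique block stored once

public:
    // Split data into fixed-size blocks, store every unique block once and
    // return the ordered list of block ids needed to rebuild the data.
    std::vector<block_id> put(const std::string& data, std::size_t block_size)
    {
        assert(block_size > 0);
        std::vector<block_id> recipe;
        for (std::size_t off = 0; off < data.size(); off += block_size) {
            std::string block = data.substr(off, block_size);
            const block_id id = std::hash<std::string>{}(block);
            blocks_.emplace(id, std::move(block)); // no-op if already present
            recipe.push_back(id);
        }
        return recipe;
    }

    // Reassemble the original data from its list of block ids.
    std::string get(const std::vector<block_id>& recipe) const
    {
        std::string out;
        for (block_id id : recipe)
            out += blocks_.at(id);
        return out;
    }

    std::size_t stored_blocks() const { return blocks_.size(); }
};
```

Storing two files that differ in a single block then costs one extra block rather than a whole second copy.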

File metadata (name, attributes, custom labels) is also stored in a block, usually much smaller in size, which can be left unencrypted to allow indexing, but could also be encrypted if you do not want to expose this metadata. In my design metadata is a key-value store with a lot of different attributes ranging from UNIX_PATH=/bin/sh to DESCRIPTION[en]="Bourne Shell executable" to UNIX_PERMISSIONS=u=rwx,g=rx,o=rx and so on. This format is not fixed, although it follows a certain schema/ontology. It allows "intelligent agents" or bots to crawl this data and enrich it with suggestions and links. For example, a bot crawling an mp3 collection could suggest proper tags, and it could also find higher quality versions of a file.

All this revolves around the ideas of DHTs, darknets, netsukuku and zeroconf. It is still too early in the implementation to have uncovered all the details, so they might change.

24 December 2012 · 3 minutes read

assocfs, content-addressable-storage, git-model, metta, non-hierarchical-filesystem

While I'm still dabbling with fixing some SSS issues here and there, I thought I'd post an old excerpt from the assocfs design document.

It's a non-hierarchical filesystem, in other words an associative filesystem. It's basically a huge graph database. Every object is addressed by its hash (content addressable, like git); knowing the hash, you can find it on disk. For more conventional searches (for those who do not know or do not care about the hash) there is metadata: attributes drawn from an ontology and associated with a particular hashed blob.

This gives a few interesting properties:

- Identical blobs will end up in the same place, giving you deduplication for free.

- Implementing versioning support is a breeze - changing the blob changes the hash, so it will end up in some other location.

- Some other things you may easily imagine.

It also has some problems:

- No root directory, but a huge attribute list instead. This requires some efficient search and filtering algorithms as well as on-disk and in-memory compression of these indexes. Imagine 1,000,000 "files" each with about 50 attributes. Millions and millions of attributes which you have to search through.

Luckily, databases are a very well established field and building an efficient storage and retrieval on this basis is possible.

As a user you basically perform searches on attribute sets using something like a humane representation of relational algebra. Blobs can have more conventional names, specified with extra attributes, for example: UNIX_PATH=/bin/bash UNIX_PATH=/usr/bin/bash allows a single piece of code to be addressed by UNIX programs as both /bin/bash and /usr/bin/bash, without needing any symlinks.

You can assign absolutely any kind of attributes to blobs; the actual rules for assigning are specified in the ontology dictionary, which is part of the filesystem and grows together with it (e.g. installed programs may add attribute types to blobs). Security labels are also assigned to blobs that way.

Attributes "orient" blobs in filesystem space - without attributes the blobs are practically invisible, unless you happen to know their hash exactly. They also form a kind of semantic net between blobs, giving the user and other subsystems a lot of information about what the blobs mean.
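A toy version of such an attribute lookup might look like the sketch below. Everything in it is an assumption made for illustration: `attribute_index`, `tag` and `find` are hypothetical names, blob hashes are plain strings, and only exact name/value matches are supported rather than a full query algebra.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <utility>

using blob_hash = std::string;                         // hypothetical: hex digest of a blob
using attribute = std::pair<std::string, std::string>; // attribute name, value

class attribute_index
{
    // One blob may carry the same attribute name several times.
    std::multimap<attribute, blob_hash> index_;

public:
    // Associate an attribute name/value pair with a blob.
    void tag(const blob_hash& blob, const std::string& name, const std::string& value)
    {
        assert(!blob.empty() && !name.empty());
        index_.emplace(attribute{name, value}, blob);
    }

    // All blobs carrying exactly this attribute name/value pair.
    std::set<blob_hash> find(const std::string& name, const std::string& value) const
    {
        std::set<blob_hash> result;
        const auto range = index_.equal_range(attribute{name, value});
        for (auto it = range.first; it != range.second; ++it)
            result.insert(it->second);
        return result;
    }
};
```

Tagging one blob with both UNIX_PATH=/bin/bash and UNIX_PATH=/usr/bin/bash makes either lookup resolve to the same hash, which is the no-symlinks property described above.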

Since recalculating hashes for entire huge files would be troublesome, the files are split up into smaller chunks, which are hashed independently and collected into a "record" object, similar to a git tree. Changing one chunk therefore requires rehashing only two much smaller objects (the chunk itself and the record), rather than the entire huge file.
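A rough sketch of the record idea, under stated assumptions: `record`, `make_record` and `replace_chunk` are hypothetical names, and `std::hash` is only a non-cryptographic placeholder for the real content hash.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

using hash_t = std::size_t; // placeholder; a real filesystem needs a cryptographic hash

inline hash_t hash_bytes(const std::string& bytes)
{
    return std::hash<std::string>{}(bytes);
}

// A record lists the hashes of a file's chunks, much like a git tree.
struct record
{
    std::vector<hash_t> chunk_hashes;

    // The record's own hash is computed over the list of chunk hashes only,
    // never over the chunk contents.
    hash_t id() const
    {
        std::string listing;
        for (hash_t h : chunk_hashes)
            listing += std::to_string(h) + '\n';
        return hash_bytes(listing);
    }
};

// Hash every chunk once to build the initial record.
record make_record(const std::vector<std::string>& chunks)
{
    record r;
    for (const std::string& chunk : chunks)
        r.chunk_hashes.push_back(hash_bytes(chunk));
    return r;
}

// Replacing a chunk rehashes that chunk alone; calling id() afterwards
// rehashes the (small) record. No other chunk is touched.
void replace_chunk(record& r, std::size_t index, const std::string& new_chunk)
{
    assert(index < r.chunk_hashes.size());
    r.chunk_hashes[index] = hash_bytes(new_chunk);
}
```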

Changes to the filesystem may be recorded into a "change" object, similar to a git commit, which may be cryptographically signed and used for securely syncing filesystem changes between nodes.

9 December 2012 · about a minute read

nat, report, uvvy

Turns out the problem was in the server-side setup. After moving the server to the Amazon EC2 cloud and setting up UDP firewall rules, punching started working. At least that takes some burden off my shoulders. The regserver connection is not very robust; that should probably be modified to force-reconnect the session once you open the search window again.

UPnP has an interesting effect on the Thomson TG784 - all UDP DNS traffic ceases on other machines, rendering name resolution unusable, unless I force it to use TCP. Not yet sure if this is a result of my incorrect use of it or if this is by design in the Thomson. Skype and uTorrent seem to punch holes just fine, so it should be me. For now I just turned UPnP off in the released code and will experiment with it more.

update: As of Jan 2014 NAT access is working properly.

1 December 2012 · about a minute read

nat, report, uvvy

There's a slight fault with uvvy not quite punching through home routers' NAT. While the UDP punching technique described by Bryan Ford should generally work, it doesn't account for the port change, hence the announced endpoint addresses as seen by the regserver are invalid. Responses don't go back because the reply port number is different from what the router's NAT assigns.

I tried using UPnP to open some more ports, but it doesn't change the fact that the advertised endpoints are still invalid. The upcoming change is to record the external IP and port of the instance, as reported by the router's UPnP protocol, into yet another endpoint and forward that to the regserver. Another nice addition would be to enable Bonjour discovery of the nodes on the local network, which hopefully would already be connected to the regserver and can forward our endpoint information.

As usual around New Year's Eve, a lot of different projects are coming up simultaneously and grinding any progress to a halt. Watch the commits on GitHub.

update: As of Jan 2014 NAT access is working properly.

15 August 2012 · about a minute read

ansa, distributed-architecture, events, fawn, metta, nemesis, report, synchronisation

I've ported events, sequencers and event-based communication primitives from Nemesis. It's a little bit messy at the moment (mostly because of mixing C and C++ concepts in one place), but I'm going to spend the autumn cleaning it up and finishing the dreaded needs_boot.dot dependencies to finally bootstrap some domains and perform communication between them. Obviously, the shortest-term plan is a timer interrupt, a primitive kernel scheduler which activates domains, and events to move domains between blocked and runnable queues.

There's some interesting theory behind using events as the main synchronization mechanism, described here in more detail.
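For a feel of what these primitives do, here is a small userspace sketch of an event count and a sequencer. It assumes the classic event count/sequencer model and is built on the C++11 standard library; it is not the Nemesis or Metta code, and the names `event_count` and `sequencer` are chosen for this illustration.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>

// A monotonically increasing counter that threads can wait on.
class event_count
{
    mutable std::mutex mutex_;
    std::condition_variable changed_;
    std::uint64_t value_ = 0;

public:
    // Current value of the counter.
    std::uint64_t read() const
    {
        std::lock_guard<std::mutex> lock(mutex_);
        return value_;
    }

    // Increment the counter and wake every waiter; returns the new value.
    std::uint64_t advance()
    {
        std::uint64_t now;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            now = ++value_;
        }
        changed_.notify_all();
        return now;
    }

    // Block until the counter has reached at least `target`.
    void await(std::uint64_t target)
    {
        std::unique_lock<std::mutex> lock(mutex_);
        changed_.wait(lock, [&] { return value_ >= target; });
    }
};

// Hands out unique, totally ordered tickets.
class sequencer
{
    std::atomic<std::uint64_t> next_{0};

public:
    std::uint64_t ticket() { return next_.fetch_add(1); }
};
```

Mutual exclusion, for example, falls out of the pair: take a ticket, await that value on the event count, do the work, then advance.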

For the vacation time I've printed some ANSA documents, which define architectural specifications for distributed computation systems and are an invaluable source of information for designing such systems. The full list of available ANSA documents can be found here (link is dead now). Good reading.

16 July 2012 · about a minute read

issue-tracking, metta, tools

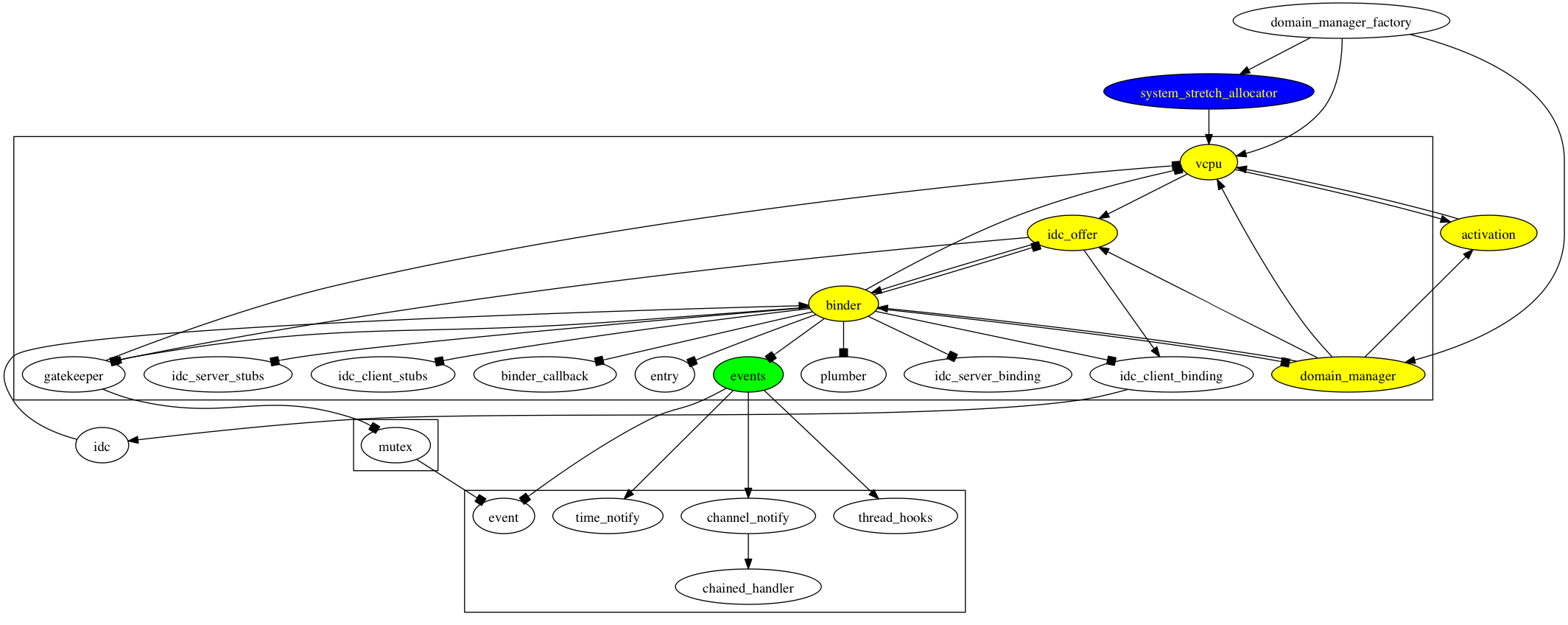

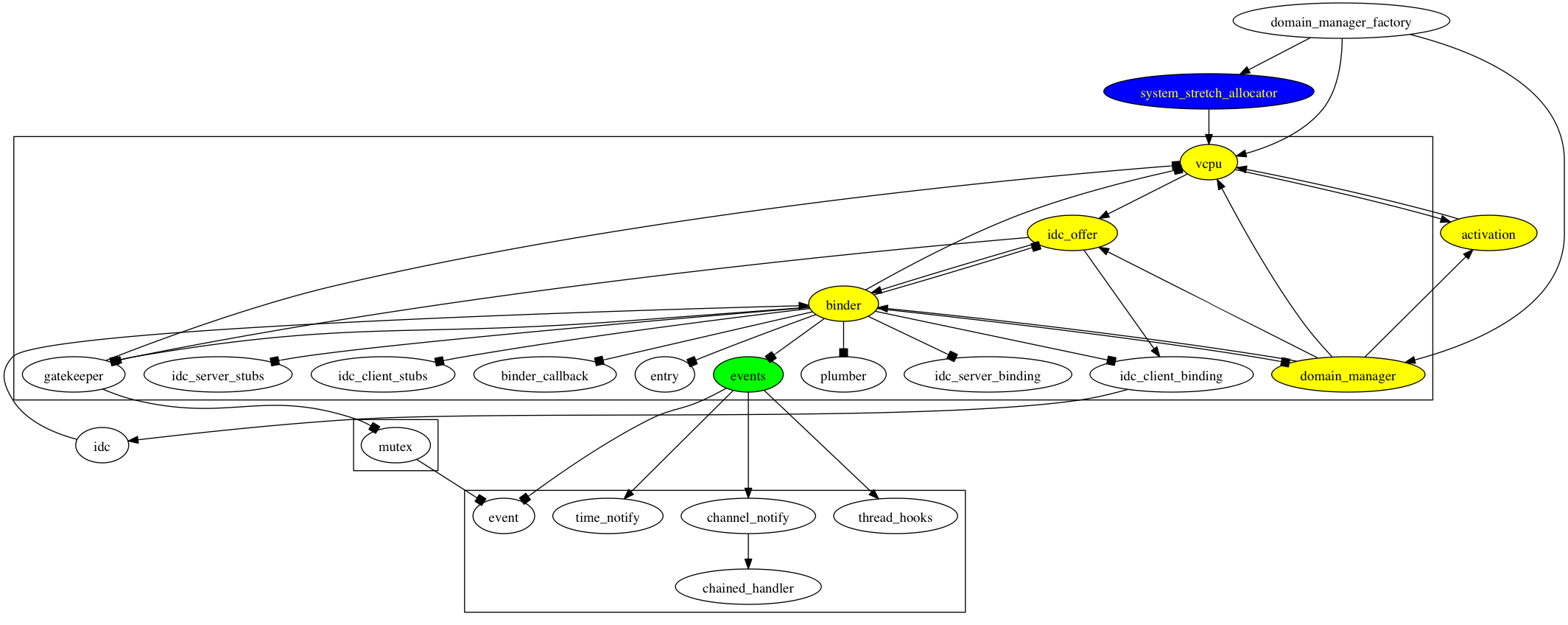

I needed to quickly check how much of Nemesis support has to be ported over before I can start launching some basic domains.

I used a simple shell one-liner to extract NEEDS dependencies from the interface files. It's easy to do in Nemesis because of the explicit NEEDS clause in each interface (it would be nice to add this functionality to meddler, which also has the dependency information available).

Here's the shell one-liner:

    echo "digraph {"; find . -name '*.if' -exec grep -H NEEDS {} \; | grep -v "\-\-" |
    sed 's/ *NEEDS //g' | sed 's@^\./@@' | sed 's/\.if//' | awk -F: '{print $1, "->", $2}'; echo "}"

This generated a huge graphic with all dependencies, which I then filtered a bit by removing unreferenced entities and culling iteration after iteration.

The resulting graphic is much smaller and additionally has a hand-crafted legend (green - leaf nodes, yellow - direct dependencies of DomainMgr and VP, my two interfaces of interest). This shows I need to work on about 10-12 interface implementations to be able to run domains.

And my ticket tracker of choice, bugs-everywhere, now has an entry 7df/0fe 'Generate dot files with dependency information in meddler'. Time to sleep.

13 July 2012 · about a minute read

clang, llvm, metta, report, toolchain, type-system

I've been working on the toolchain building script. Now, at least on Macs, it's possible to build a standalone toolchain for building Metta, and you can download it and try to build it yourself. All necessary details are described on the SourceCheckout wiki page. There is followup work to remove the dependency on binutils and gcc (gcc will probably go first, then once lld is mature enough I could get rid of ld/gold).

Another update is about the type system. The operations on the type system are implemented now; I can successfully register type information and query it. Some examples of that are in the recently released ISO image R925. Next up is fixing some of the naming context operations so I can actually create and operate hierarchical naming contexts.

14 June 2012 · 2 minutes read

coding, introspection, metta, reflection, self-aware, startup

Since I've decided to approach the system development from both low-level and high-level perspectives, one of the applications I have in mind for demo purposes is a little console tool which lets you activate various parts of the system, list available services and call operations on available interfaces.

Imagine a little tool that allows you to pick a video file, seek it to a particular time and play it frame by frame, then run face recognition on each frame and make a database of recognized faces. Being able to make such applications "mashup style" by just fiddling with text and pictures in the command line should enable people to create more and more interesting tools from the basic building blocks presented by the system.

This tool would need to inspect installed interfaces and types of the running components and be able to construct calls to these components directly from the command line. This requires introspection, or the ability to describe the structure of objects in the system.

At the moment I'm working on an extension of meddler that generates introspection data from the interface IDL. That part is generally simple. The next step would be to somehow register this information in the system when a new interface type is introduced, which is harder and requires some design effort. In the first approach, of course, only the boot image is loaded, so registering types is very simple.

Next up is the actual introspection interface: how to know what format a particular data type has and how to marshal/unmarshal it for the purpose of interchange and operation calls on interfaces.

See this little script for the possible demo storyboard.
